I jumped on the smart-glasses rocket ship thanks to Sulava Gulf, which decided to gift all of us employees smart glasses to embrace AI-native work! About two weeks, one conference in Paris, a visit to our Dubai office, and countless “Hey Meta…” moments later, here’s my take.

Before going further, here are the key tech specs of the Ray-Ban Meta Wayfarer smart glasses:

- 12 MP ultra-wide camera for HD photos and video: 3,024 × 4,032 pixels for photos, 1,440 × 1,920 pixels at 30 FPS for video.

- Two custom-built open-ear speakers, a five-microphone array, and Bluetooth connectivity

- 32 GB flash storage (500+ photos, 100+ 30s videos)

- Live streaming

- Battery: up to 4 hours on a single charge and up to 36 hours with a fully charged case; up to 30 minutes of live streaming.

No AR? No Problem!

“Will I end up looking at some tiny hologram?”

That was my first concern. I’m at that age where near-focus vision isn’t what it used to be. To my relief, the Wayfarers are completely display-free: no mini-screens, no floating widgets, and nothing to strain my eyes. Everything happens through voice commands or a quick tap on the temple, leaving my vision untouched and my hands free. After using the glasses for a while, I’m convinced this is how it should be: a natural conversation with the AI.

From a distance they could pass for any regular pair of Ray-Bans: classic shape, familiar finish. The only giveaways are the tiny camera lens near the hinge and the slightly thicker temples where the electronics hide. That understatement suits me perfectly: they look great for everyday wear, nothing flashy, and most people won’t notice they’re “smart” unless it’s pointed out.

Simplicity matters when you’re already juggling coffee, laptop bag and conference badge.

Photos & Video on Tap

Imagine this: the keynote speaker drops a killer slide and everyone scrambles for their phones, while I just tap the button on the glasses, click, and the slide is saved from my eye-level angle. Sure, sporting sunglasses in a dim keynote hall feels a bit odd, but a few puzzled looks are a small price for the perfect slide capture.

Video is also extremely useful. Walking outside and spot something you want to record? Long-press the button and the video starts rolling. Think of walking around an expo, following an event, or just capturing moments and feelings.

A few practical notes:

- The LED indicator does its privacy job: people notice the light and know I’m recording.

- Audio capture is surprisingly rich thanks to the five-mic array, which focuses on your voice rather than the surrounding chatter.

All of that without whipping out a phone or fumbling for the record button. Very useful!

Field testing

Paris, June 2025. I was at Modern Workplace Conference Paris 2025, surrounded by rapid-fire French. I flipped the glasses into Live Translation mode, and after a pause of one to a few seconds, the chatter morphed into English in my ears. The first time it happened, I literally mouthed “wow”; it felt like a sci-fi moment.

The good:

- Close-range clarity. Conversation partners within ~2–3 m? After a brief 1–2 s delay, the English voice-over arrived: impressively good, not flawless, but better than I feared.

- Sign spotting. I tested it on an information sign beside a pond: “Hey Meta, translate what I’m looking at” turned the French text into crisp English. Menus were already bilingual, but random signs like this really showed the feature’s value. Unfortunately, this works for only a handful of languages, but French is one of them.

The not‑so‑good:

- Crowd chaos. Multiple voices? Background music? Translation quality drops quickly.

- Language limitations. Right now Meta supports English ↔ French, Italian and Spanish. Anything beyond that, and you’re getting your phone out.

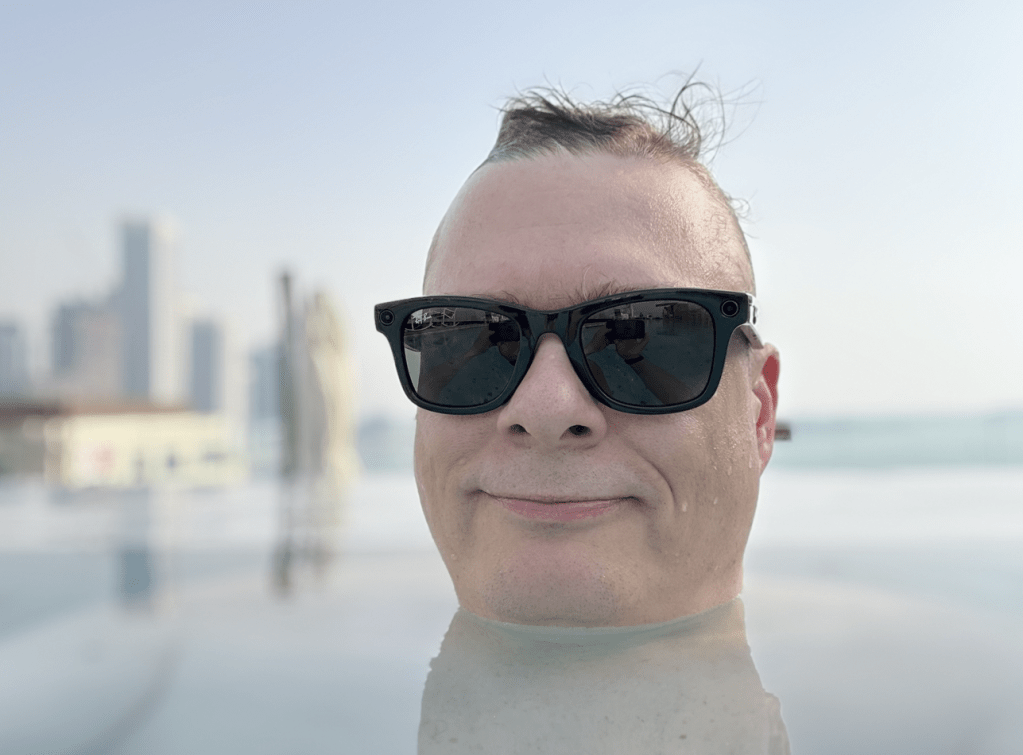

I also put them to good use on my trip to Dubai, where the future (and the daylight) is very bright! Want to capture a moment while walking outside? Just click the button. Recording is just as easy and intuitive. I even took them to the pool during a few hours off, listened to music and took a few pictures. Note: the glasses are IPX4 rated, which means they tolerate splashes but not soaking or submersion. They are not meant for swimming, so I was very careful when taking pictures in the water.

Bottom line: promising, but the sci-fi future isn’t here yet. Still, the tech is improving every day, and the glasses keep getting updates.

“Hey Meta, What Am I Looking At?”

Armed with curiosity, I spent some time testing Meta’s object recognition. Point at a landmark and ask: “Hey Meta, what am I looking at?”

Sometimes it rocks, and sometimes… it runs out of ideas. The reason is the lack of location context: the glasses have no GPS, so the AI must rely solely on camera input and cloud smarts. Give it a famous skyline silhouette and it aces the test; give it something niche and it merely tells me I’m looking at a statue with certain features.

Still, when it does click, it feels great!

Navigation with Google Maps using Voice UI

Confession: I get lost easily, so pairing the glasses with Google Maps audio guidance turned out to be a great feature. Turn-by-turn directions play in my ears, my eyes stay on the lively scooter traffic, and my phone stays in my pocket.

The experience highlights why audio‑first wearables rock:

- No more staring at the phone (apart from the occasional check).

- Continuous situational awareness.

- Subtle cues – nobody knows I’m being navigated except me.

I only wish I could start the route by voice. For now, I unlock the phone, set the destination, then slide it away.

Conversational Copilot Chats

Instead of typing into Teams on my phone, I fire up Microsoft Copilot through the glasses and start an audio dialogue:

“Copilot, draft three hook sentences about using AI at work.”

If (when!) Microsoft 365 Copilot goes fully voice-native, imagine chatting about your work content, planning meetings, and preparing Word documents or PowerPoint decks while strolling outside, phone-free and looking cool. Of course, I could do this with a Bluetooth headset too, but the glasses are so much cooler for this.

Prescription or not?

In my haste, I chose non-prescription black lenses. Big mistake? Maybe. Reading and typing on my phone still require my regular glasses. The upside: I can pass the Wayfarers around, let colleagues test-drive the tech, and spread real-world smart-glasses use cases.

Pro tip: if you need vision correction and plan to make these your daily drivers, bite the bullet and order prescription lenses from day one. Remember, the lenses aren’t swappable and can’t be retrofitted.

What I Still Miss

- Deeper AI context. The assistant should remember my previous question, know I’m staring at a statue, and answer accordingly.

- True spatial awareness. GPS (or phone‑tethered location) plus a bit of computer vision would unlock place‑based info and smarter search.

- Voice-only everything. From launching navigation to sending WhatsApp voice notes, remove the last phone tap.

- Wider language coverage. Arabic, Nordic tongues, Asian languages, you name it. Let’s break the barriers already!

Each of these gaps is a roadmap opportunity. Fingers crossed we’ll see progress in the next firmware update (or the inevitable next-gen hardware).

Conclusion

Are Ray-Ban Meta Wayfarers perfect? Nope, but they’re a thrilling glimpse into a future where voice is the new UI and cameras sit at eye level. For certain tasks, like POV content, discreet audio, quick language help, or hands-free calls as a headset, the glasses already beat pulling out a phone.

Give them smarter AI and wider language support, and we’ll smile knowingly at the days we carried slabs of glass in hand. Until then, I’m happily tapping my temple, enjoying the half‑magic, and dreaming of the full‑on sci‑fi version.

What would you do with smart glasses? Let me know in the comments.